A short essay on Automated Testing and why it's useful (in VFX too!)

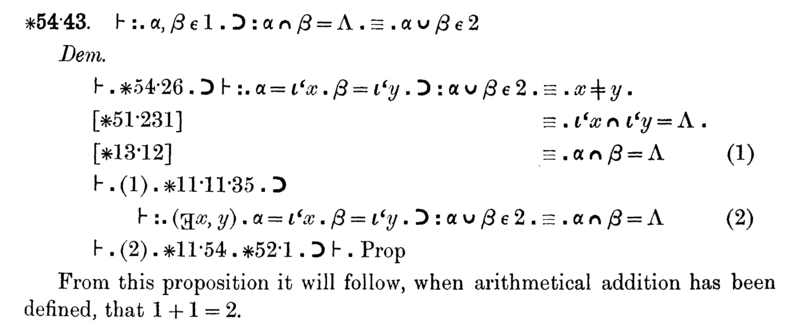

An extract from the Principia Mathematica, the colossal work by Whitehead and Russell that takes hundreds of pages of groundwork before formally proving that 1+1=2. If these two dedicated a good chunk of their lives to proving that 1+1=2, we can dedicate a few hours of our time to proving (testing) that our code behaves as intended.

Premise

I was recently pinged by the Training department of my studio to give a short practical talk on testing and other Python bits. Since I've never really given a tech talk to a technical audience, I'm writing this article to explore the themes I'm preparing for the actual talk and the various assumptions that people might have.

Assumption 0: You don't really need tests 🍰

This statement is strongly linked with the feeling that

Tests are for people that have enough time and good requirements

which I've come across many times, especially when working in Visual Effects.

The general idea is that tests can only be written in a perfect working environment where you both have plenty of time to write great, clean code and magically benefit from clear, coherent specs from your clients about exactly what the software they're asking for should accomplish. The takeaway of this assumption is that tests are the cherry on top of an already beautiful cake, and since it's rare to craft such beautiful cakes, we should stop fantasizing about cherries.

This assumption is false, for one simple reason: everyone tests their software. Of course, there is also the chance that you are perfect: your code is bug-free and you get it right the first time through the sheer magnificence of the abstraction you have chosen. Even if you are delusional enough to believe you can achieve something like that in a mortal life, such bug-free code would probably have to work under incredibly tight conditions and very formal requirements, and that is not the case in most industries where programmers operate nowadays. Because most of us work in businesses whose number one aim is to stay afloat financially, it is very likely that strategic decisions will steer a software project to places the original programmer could never have imagined.

Hey, can we port that 10-year-old system for doing X, which was meant to work in a totally different software environment, to this other environment we've built recently?

So I expect everyone to test their code at least once, potentially manually, before pushing a release to production. Bug-fixes might be exempt, but it's so easy to introduce regressions with a fix that I expect considerate people to always test their code at least once.

So what Assumption 0 really argues against is the idea that we have time to write automated tests.

There's a very funny expression that I've found online and that I'm gonna steal: BDD. It stands for Bug Driven Development (not to be confused with the actual BDD, which is Behaviour Driven Development). This funny form of BDD is basically what we do all the time: we write code, we find a bug, we fix it, we keep going.

Having already ruled out the idea of writing bug-free code, I think there are three main methods that we can use to find such bugs:

1. You let your users find bugs for you

2. You manually find bugs while writing and testing your code

3. You automatically find bugs while writing your code

I expect most people are already familiar with #1 and #2 in some form, so let's explore the friction in adopting #3.

Why people don’t write automated tests

That is one of the things that has puzzled me for a while. I have seen brilliant, senior programmers write huge libraries without bothering to write a single unit or integration test. Thousands of lines of code that are used daily by hundreds of people. So it's not a given that anyone with a good amount of experience will naturally find writing tests a good and useful activity. I will start from this lovely and realistic description of types of programmers and see why each category doesn't like writing tests, and why they should instead.

Reason 1

In my mind, the 'Duct Tape', 'Anti-programming' and 'Half-assed' programmers all share a similar reason for not writing tests. The duct tape programmer might be against writing tests from scratch if they don't already exist, because that's not the point of duct tape: the point of duct tape is to just fix stuff. So I can imagine a duct tape programmer happily fixing broken tests or updating them to comply with their latest bug-fix, but seeing writing tests from scratch for a new feature as a waste of time. The anti-programming programmer avoids programming in the first place, so they will avoid writing tests themselves, although they might have a neutral view of tests in general. The half-assed programmer is probably already bothered enough by having to write code, so I can't imagine them making the extra leap of doing more work in the form of tests. That's why I feel that these 3 categories all share this reason:

Writing tests is perceived as a loss of time for no real gain.

Reason 2

On the other hand, we have our toughest types: 'The theoretical programmer' and 'The OCD perfectionist programmer'. There are variants of both types, and some of them could be Test Driven Development fanatics. In that case, we're good (not really, but let's skip over them: I can't solve all the problems of the universe in a single blog post!). Generally speaking, though, I feel that the other varieties could be against tests because tests make the code look less elegant. For them, writing code means making some intellectual abstraction visible through a series of stunningly elaborate typographical choices in the form of a programming language, and that practice only happens to be incidentally lucrative and useful. Having tests in their code base looks as if their carefully crafted programming choices are not proof enough by themselves. Tests might also make such a code base less 'beautiful', 'elegant', or 'pure'. This reason can be summarized as:

Tests act against their pure efforts to reveal the truth of the universe through code.

Why people should instead write automated tests

Having analyzed why people don't feel the urge to write automated tests, let's explore how each reason can be countered.

Response to Reason 1

Premise:

Writing tests is perceived as a loss of time for no real gain.

Response:

It is only faster not to write tests in the short run.

In the long run, a clear test base makes it easy to change the internals of a system without changing its external behavior. I could go on at incredible length about the technicalities involved, but that would be material for a whole book. So let's narrow it down to another simple statement: tests are a fast, risk-free way of modeling real-world complexities on a smaller scale. Doesn't that statement sound quite familiar? Isn't that the description of programming itself?

I've introduced the adjective fast because it's crucial to understand that manually reproducing every potential combination of actions an end user could perform is an incredibly time-consuming task. If we can capture 90% of what a user could do in an automated way, that is, in a way performed by a machine rather than a human, then we've saved the human some time.

The other interesting adjective is risk-free. Since we're simulating the behaviour of things before they're introduced to the 'real world', any error we encounter has almost no impact on anyone's life. A frustrating bug is not just an abstract programming error: it has an actual impact on people's mood and their attitude towards a specific technology and, in pure business terms, on the value of your specific software. Ever felt incredibly frustrated with a particularly buggy app that crashes way too often, to the point of wanting to literally throw the computer out of the window? I think it's important not to forget the human component of Human-Computer Interaction, even if we're just Duct Tape programmers, because all of us consume somebody else's software, in one way or another.

Response to Reason 2

Premise:

Tests act against their pure efforts to reveal the truth of the universe through code.

Response:

If you don’t write tests, you’re just writing Conjectures, not Theorems

My argument here is that these programmers should take inspiration from the world of mathematics (which is one level 'purer' than programming, since it mostly works with ideas rather than their realization) and see tests as 'proofs' of the 'theorems' they're writing. If we, as software developers, are writing simple theorems that operate within a specific business environment (a body of knowledge), how can we prove that our theorems are consistent with the other theorems? Axioms are, by definition, accepted without proof, and it is generally the role of the client to provide us with them. In a company, axioms are all the underlying decisions that you don't really have control over: they might change, but changing them would create an entirely new body of knowledge (a new product, division, team, even a new company!). Theorems, on the other hand, must be proved through a specific decision procedure. That's how math progresses, and math is definitely more elegant than programming (in my opinion). A theorem without a proof is just a conjecture. Conjectures are fascinating, but the real credit goes to the people who prove them. So, my fellow OCD programmers, if you don't want to just write conjectures or stipulate new axioms, spend time writing tests that prove your implementation is sound against a variety of inputs.

Note for math purists: tests and proofs are distant relatives, but they achieve a similar goal: building a solid foundation that new knowledge can rely on.

Ok you've convinced me. Where next?

Say that at this point you're at least curious about writing tests. Where to go next? What constitutes a Good Test™? Can we write tests even if we don't do TDD?

Answering these questions would probably take a whole chapter of a book (if somebody wants to publish it, hit me up!). But I will spend a bit of time here showing how to write decent tests by using the best way for human beings to learn: negative reinforcement. I will show one example of a test that smells, why it smells, and how one would approach making it better.

Vivisecting an example of when tests can smell

Let's have a very practical look at a real world function.

That function is a Unit Test that checks if the find_frames function works as expected.

The example is in Python, using pytest, but most of what I want to show can be applied in other contexts too.

For people not in VFX, the word 'frame' might seem a bit obscure, so let me just explain what that is.

Frame: an image that is part of a video. A video at 25 FPS is composed of 25 frames per second, that is, 25 images shown in a single second so that our brain is tricked into thinking that there is some movement happening. In Visual Effects we work with frame sequences a lot, because video formats are a tangled, interconnected mess of many frames, while an image is a single, distilled element with no dependency on other frames. So it's much easier to work on single images and produce a video from them than to work on the video itself.

Given an input path, the find_frames(input_path) function simply tries to find frame sequences on disk.
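For readers outside VFX, the `seq.%04d.exr` pattern that appears in the test below is a printf-style placeholder: `%04d` zero-pads each frame number to at least four digits. A quick illustration in plain Python (nothing studio-specific here):

```python
pattern = "seq.%04d.exr"

# Expand the printf-style token for a short range of frame numbers.
filenames = [pattern % frame for frame in range(1001, 1004)]
print(filenames)        # ['seq.1001.exr', 'seq.1002.exr', 'seq.1003.exr']
print(pattern % 7)      # seq.0007.exr
print(pattern % 12345)  # seq.12345.exr (the width is a minimum, not a limit)
```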

Since this is meant to be a hands-on, practical look at how tests are written in real life, I went looking for an existing Unit Test for this function. This was the only one I could find in our code base:

```python
from unittest import mock

# find_frames is imported from the studio's own module (not shown here).


@mock.patch("os.path.exists", return_value=True)
@mock.patch("glob.glob")
def test_find_frames_omits_non_digit_frames(mocked_glob, mocked_path_exists):
    """The globing for frames can fail if it picks up files that do not
    belong to the sequence that we are looking for."""
    mocked_glob.return_value = [
        "seq.1001.exr", "seq.1002.exr", "seq.1003.exr",
        "seq.other.1004.exr", "seq.other.1005.exr", "seq.other.1006.exr",
    ]
    assert str(find_frames("seq.%04d.exr")) == "1001-1003"
```

To understand the scope of the test, let's look back at the docstring, which states its intent:

"""

The globing for frames can fail if it picks up files that do not

belong to the sequence that we are looking for.

"""

Ok, so the problem we're testing against is that the glob used by find_frames can sometimes pick up fewer files than we would expect?

But we feed seq.%04d.exr to find_frames and assert that "seq.other.1004.exr", "seq.other.1005.exr" and "seq.other.1006.exr" are not contained in the returned object.

That seems a bit convoluted to me, but it's probably just because of the word 'fail'. In my head, I interpret the previous statement as

"""

Test that the globing for frames will not pick up files that do not belong

to the sequence that we are looking for.

"""

That is still a bit vague, but IMHO, removing a word which has a slightly subjective connotation helps to better describe the intent of the test.

One person's failure is another person's win. To me, it kinda makes sense that if I specify "seq.%04d.exr", then "seq.other.1004.exr" will not be part of the resulting sequence. Saying that the function would 'fail' made me go off-track for a bit, because it made me think that the expectation was for it to succeed instead.

Let's go on with the implementation of the test.

Since the juice of find_frames is a very simple regex used against a path, the author of this test decided that it's fine to mock the filesystem details:

```python
@mock.patch("os.path.exists", return_value=True)
@mock.patch("glob.glob")
```

I suppose the argument here is that we don't care about the intricacies of interrogating the OS to retrieve files, etc.: we just trust the os and glob modules to work well, since they're part of the Python standard library. So we use mock.patch (which comes from unittest.mock, not from pytest itself) to 'monkey patch' os.path.exists so that it always returns True, and glob.glob() so that it returns the list assigned to mocked_glob.return_value in the body of the test. That is why the test can just pass "seq.%04d.exr": we no longer care whether this first argument is an actual existing path on some storage.
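A small standalone illustration of what those two decorators do, mirroring the test above (the function name is made up for the example). Stacked `mock.patch` decorators are applied bottom-up, so the patch closest to the function arrives as the first mock argument, and every patch is undone once the decorated function returns.

```python
import glob
import os
from unittest import mock


# Decorators apply bottom-up, so the patch closest to the function
# ("glob.glob") arrives as the first mock argument.
@mock.patch("os.path.exists", return_value=True)
@mock.patch("glob.glob")
def peek_at_patched_functions(mocked_glob, mocked_path_exists):
    mocked_glob.return_value = ["seq.1001.exr", "seq.1002.exr"]
    # While the patches are active, neither call touches the filesystem.
    return glob.glob("/no/such/dir/*.exr"), os.path.exists("/no/such/dir")


listed, exists = peek_at_patched_functions()
print(listed)  # ['seq.1001.exr', 'seq.1002.exr']
print(exists)  # True
# The patches are undone as soon as the function returns.
print(os.path.exists("/no/such/dir"))  # False
```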

Mocking always leaves room for discussion. I've seen tests mock so much that I was left wondering what they were really testing in the end. But here I think the use of mocking is still reasonable.

If we keep looking at this function, we see that there's something funky going on. mocked_glob is used in the body to set the return value, but mocked_path_exists is never used, because its return_value is already defined in the decorator itself. This is not great, but I'd say it's mostly the result of a hasty change made without double-checking everything. So it's funky, but it doesn't smell that bad.

What really smells bad to me is this:

```python
assert str(find_frames("seq.%04d.exr")) == "1001-1003"
```

I suppose that in the mind of the author, the logic was something like:

I know that 'find_frames()' returns an object whose string representation is something like 'firstframe-lastframe' so I'm gonna check against that string representation because that sounds intuitive enough.

Oooof.

I mean, I get it, but in my mind that doesn't look like a solid approach for a Unit Test, because there are too many steps you're implicitly relying on without testing them, even though they are quite important to be sure that find_frames works as expected.

I might see this approach as valuable in an Integration Test, but only if we actually consume that string representation heavily in our code base. The thing is, we don't.

The object that find_frames returns is a FrameSet, which is infinitely more useful than its string representation, and it's what most of the code calling find_frames actually uses.

So I would dare to say: let's test against properties of that FrameSet class, instead.

One day, we might decide to update the __str__ of FrameSet, and this would cause this particular test to fail.

But would that mean that the code out there in the real world would fail? No, because 90% of our code doesn't really care about that str representation.

So the only Unit Test I could find for this function performs a rather trivial check, which doesn't really guarantee anything in real life.

If the str representation is such an important detail, then it's better to have a unit test on the FrameSet side of things that checks something like:

```python
assert str(FrameSet(1001, 1002, 1003)) == "1001-1003"
```

Then we can have one test for find_frames that does something like:

```python
assert isinstance(find_frames("seq.%04d.exr"), FrameSet)
```

The idea is that as long as find_frames returns a FrameSet, we're good. If someone changes the __str__ of FrameSet, they won't need to update our tests, only the Unit Tests on the FrameSet class itself. That makes sense because it keeps changes more isolated.
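Putting these suggestions together, here's a sketch of how the test could look when it checks properties of the returned FrameSet instead of its string. The article doesn't show find_frames or FrameSet, so both are hypothetical stand-ins written only so the example runs: find_frames globs loosely and then keeps only the names that match the `%04d` pattern through a regex. Both mocks are configured in their decorators, so the unused arguments get an underscore prefix, which also sidesteps the mocked_path_exists oddity.

```python
import glob
import os
import re
from unittest import mock


class FrameSet:
    """Hypothetical stand-in for the studio's FrameSet class."""

    def __init__(self, *frames):
        self.frames = sorted(set(frames))

    def __str__(self):
        # Contiguous frames collapse to "first-last"; anything else is comma-separated.
        if self.frames and len(self.frames) == self.frames[-1] - self.frames[0] + 1:
            return f"{self.frames[0]}-{self.frames[-1]}"
        return ",".join(str(frame) for frame in self.frames)


def find_frames(input_path):
    """Hypothetical sketch (no input validation, to keep it short)."""
    token = re.search(r"%0?(\d*)d", input_path)
    if not os.path.exists(os.path.dirname(input_path) or "."):
        return FrameSet()
    head, tail = input_path[:token.start()], input_path[token.end():]
    width = int(token.group(1) or "1")  # "%04d" -> at least 4 digits
    name_re = re.compile(
        re.escape(os.path.basename(head)) + (r"(\d{%d,})" % width) + re.escape(tail) + "$"
    )
    frames = []
    # Glob loosely, then let the strict regex decide what belongs to the sequence.
    for path in glob.glob(glob.escape(head) + "*" + glob.escape(tail)):
        match = name_re.match(os.path.basename(path))
        if match:
            frames.append(int(match.group(1)))
    return FrameSet(*frames)


@mock.patch("os.path.exists", return_value=True)
@mock.patch("glob.glob", return_value=[
    "seq.1001.exr", "seq.1002.exr", "seq.1003.exr",
    "seq.other.1004.exr", "seq.other.1005.exr", "seq.other.1006.exr",
])
def test_find_frames_ignores_files_from_other_sequences(_mocked_glob, _mocked_exists):
    """Test that find_frames does not pick up files from other sequences."""
    result = find_frames("seq.%04d.exr")
    assert isinstance(result, FrameSet)
    assert result.frames == [1001, 1002, 1003]


def test_frameset_str_collapses_contiguous_frames():
    # The string format is FrameSet's concern, so only FrameSet's tests rely on it.
    assert str(FrameSet(1001, 1002, 1003)) == "1001-1003"
```

If the `__str__` format ever changes, only the second test needs updating.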

What to test, then?

But it's not over.

I feel there are so many more things that could go wrong in real-world usage of find_frames that we haven't really touched on.

Here are a few examples:

- What if I feed a non-string to find_frames?

That's the usual 'garbage in' test, especially important in Python because of its dynamic nature. What does the function return? There's no way to tell right now. Maybe it fails silently and returns None? Or maybe it raises an exception? That's such an important part of the behaviour of a function: let's definitely test that!

- What if I feed a well-formed string representing a non-existing path?

- What if the path is a symlink? Does this function follow symlinks automatically?

- What constitutes a valid abstract input path? Can I feed something like seq.*.*.exr?

- Would seq.%d.exr work, too?

And so on. Writing tests is a creative exercise because it trains your imagination. You have to repeatedly ask: what could go wrong here? What are the axioms I'm relying on, and what are the theorems I want to prove?
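Here's a sketch of how some of these questions could turn into tests. The behaviours are choices made for illustration, not what the real find_frames does: a non-string raises TypeError, a pattern without a `%d`-style token (like `seq.*.*.exr`) raises ValueError, and a missing directory yields an empty FrameSet. find_frames and FrameSet are hypothetical stand-ins, and the tests create files in a real temporary directory instead of mocking (pytest's `tmp_path` fixture would do the same job).

```python
import glob
import os
import re
import tempfile


class FrameSet:
    """Hypothetical stand-in for the studio's FrameSet class."""

    def __init__(self, *frames):
        self.frames = sorted(set(frames))


def find_frames(input_path):
    """Hypothetical sketch; every behaviour below is an assumption, not the real code."""
    if not isinstance(input_path, str):
        # Garbage in: fail loudly instead of silently returning None.
        raise TypeError(f"expected a str path, got {type(input_path).__name__}")
    token = re.search(r"%0?(\d*)d", input_path)
    if token is None:
        # Wildcard patterns such as "seq.*.*.exr" are rejected in this sketch.
        raise ValueError(f"no %d-style frame token in {input_path!r}")
    if not os.path.exists(os.path.dirname(input_path) or "."):
        return FrameSet()  # A missing directory simply yields no frames.
    head, tail = input_path[:token.start()], input_path[token.end():]
    width = int(token.group(1) or "1")  # "%04d" -> at least 4 digits, "%d" -> at least 1
    name_re = re.compile(
        re.escape(os.path.basename(head)) + (r"(\d{%d,})" % width) + re.escape(tail) + "$"
    )
    frames = []
    for path in glob.glob(glob.escape(head) + "*" + glob.escape(tail)):
        match = name_re.match(os.path.basename(path))
        if match:
            frames.append(int(match.group(1)))
    return FrameSet(*frames)


def _touch(directory, *names):
    """Create empty files so the tests run against a real filesystem."""
    for name in names:
        open(os.path.join(directory, name), "w").close()


def test_non_string_input_raises_type_error():
    for garbage in (None, 1001, ["seq.%04d.exr"]):
        try:
            find_frames(garbage)
        except TypeError:
            continue
        raise AssertionError(f"find_frames({garbage!r}) did not raise TypeError")


def test_pattern_without_frame_token_is_rejected():
    try:
        find_frames("seq.*.*.exr")
    except ValueError:
        return
    raise AssertionError("expected ValueError for a pattern without a %d token")


def test_missing_directory_returns_empty_frameset():
    with tempfile.TemporaryDirectory() as root:
        assert find_frames(os.path.join(root, "nope", "seq.%04d.exr")).frames == []


def test_unpadded_token_finds_unpadded_frames():
    with tempfile.TemporaryDirectory() as root:
        _touch(root, "seq.1.exr", "seq.2.exr", "seq.10.exr")
        assert find_frames(os.path.join(root, "seq.%d.exr")).frames == [1, 2, 10]
```

The symlink question is left out of this sketch because creating symlinks on Windows can require extra privileges.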

A final note on how to help yourself write Good Tests™

Test Driven Development got one thing right, in my opinion: it's way easier and more efficient to write software that is easy to test than to write software and then try to bolt tests onto it.

Writing everything and then trying to formalize the requirements later can be a painful activity if you don't have enough experience with tests. I don't necessarily think TDD is viable in every case, but it can be a good friend in some scenarios. There are also other approaches that can help you better design your software abstractions.

One of these is DbC, Design by Contract. To make it (incredibly) short, in Design by Contract you have a precondition that has to be met by your caller, a postcondition that you have to meet, and an invariant: stuff you guarantee is not gonna change in the process.

Summarized:

- What does the contract expect?

- What does the contract guarantee?

- What does the contract maintain?

If you look at software in this way, it’s gonna be very easy to write tests that check these 3 conditions.
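As an illustration, here's a hypothetical FrameRange class (made up for this example, not part of any studio code) with the three conditions spelled out, followed by one test per condition. The in-code checks use `assert`, which Python strips when run with `python -O`, so treat them as development-time guards rather than the contract's only enforcement.

```python
class FrameRange:
    """Hypothetical inclusive frame range used to illustrate Design by Contract."""

    def __init__(self, first, last):
        # Precondition (what the contract expects): two ints, with first <= last.
        if not isinstance(first, int) or not isinstance(last, int):
            raise TypeError("first and last must be integers")
        if first > last:
            raise ValueError(f"first ({first}) must not be greater than last ({last})")
        self.first = first
        self.last = last
        self._check_invariant()

    def __len__(self):
        return self.last - self.first + 1

    def _check_invariant(self):
        # Invariant (what the contract maintains): a range is never inverted.
        assert self.first <= self.last, "invariant broken: first > last"

    def shifted(self, offset):
        """Return a new range moved by `offset` frames."""
        before = (self.first, self.last)
        result = FrameRange(self.first + offset, self.last + offset)
        # Postcondition (what the contract guarantees): the length is preserved.
        assert len(result) == len(self), "postcondition broken: length changed"
        # Invariant: the original range is left untouched.
        assert (self.first, self.last) == before, "invariant broken: original mutated"
        self._check_invariant()
        return result


def test_precondition_rejects_inverted_range():
    try:
        FrameRange(1010, 1001)
    except ValueError:
        return
    raise AssertionError("expected ValueError when first > last")


def test_postcondition_shift_keeps_length():
    original = FrameRange(1001, 1010)
    assert len(original.shifted(-1000)) == len(original) == 10


def test_invariant_original_range_is_unchanged():
    original = FrameRange(1001, 1010)
    original.shifted(5)
    assert (original.first, original.last) == (1001, 1010)
```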

If it's very hard to write a test for a function or method, either because you can't really pinpoint the 3 underlying conditions in that particular implementation, or because the code is written in a way that makes no guarantee at all to the caller, then it's generally a good sign that such code doesn't need tests: it needs a refactor.