Choosing to work with Common Lisp in 2023

Image in the public domain; original credit goes to Reddit user Anenome5.

Tell me why

First of all, why would you still use a programming language whose first design dates back to the late 1950s, through an implementation (SBCL) whose lineage, via CMUCL, goes back to the early 1980s?

If you do the math and compare it to the pace of web development, it's like sticking with a tool that was written 126 JavaScript frameworks ago (according to this SO article). Imagine doing web development today using something that came before jQuery!

My main reason for sticking with 'vintage' tech is that, for exploratory programming, Common Lisp with Emacs + SLY is incredibly effective, and I've found few things that come close in terms of interactivity. And exploratory programming is mostly what I do in my 'recreational programming', aka 'the coding I do in my spare time', aka creative coding and data visualization.

To Rust or not to Rust

As much as I love Rust, the fight-the-compiler/compile/run/update cycle completely breaks my flow whenever the feedback loop includes judging a visual component. Even when the compilation step takes only a few seconds, that's still miles away from the beautiful <400 ms of the Doherty Threshold.

For the same (complementary) reason, I absolutely love Rust whenever I have a tough problem to solve that doesn't really involve a visual component: I can keep iterating without leaving the 'I am just writing code' zone. I only run the code once I have finally won my duel with the compiler, and by then I just have a few (self-inflicted) logic bugs to solve and no type or null errors left.

Sadly, none of this flow state happens with Python, the other programming language I use at work, since I have to keep switching between writing the code and running it to check that it works (even when using type hints), which is mentally painful. And even if you follow a TDD approach, there are things you just can't test from within a unit test. Static type checkers (e.g. pyright) help, but I'm still far from the experience I get with rust-analyzer. For this reason, I find that Python sits in a weird spot between the 'free-exploration-via-a-REPL' flow I have with a Lisp and the 'type-now-don't-worry-later' state of Rust.

When writing Common Lisp (CL), 90% of the time I'm just tinkering in a REPL, working on short functions that are generally easy to compose thanks to the functional style CL supports. And when I'm working on a whole sketch at once, I can iterate very quickly on the pieces I need via the beautiful (Doom) Emacs shortcuts like SPACE+m+e+b (sly-eval-buffer) or SPACE+m+e+f (sly-eval-defun).

This is similar to how I usually work on Python code in an IPython REPL, except that it truly extends to my whole code base, instead of just to short snippets that lend themselves to the REPL.

Usage tips

Because I'm a complete noob at Common Lisp (and Scheme...), I will be sharing here bits of Lisp-y 'wisdom' as I gather them from the bits and bobs scattered around the web. This is mostly so I have a single place to look things up when I need them, although I hope it will prove useful to somebody else out there. I'll keep updating this article as I discover new things.

For practical reasons, I will be focusing on Steel Bank Common Lisp, since that's the implementation I'm using. I have played around with Chicken Scheme too, and found it to be a pleasant experience, but for me Scheme, as a language, is too bare to be truly useful in my day-to-day (some people call it minimal, I'd call it meagre), even with the beautiful work that the Chicken team did in terms of libraries and ease of use (csi is the CLI that I wish CL had!).

Note: due to my inexperience in CL some of this might be incorrect, so please let me know in case something I post is particularly wrong.

Performance is an implementation detail

The first lesson I learned is that the Common Lisp HyperSpec does not describe the expected performance of the various parts of the language, because that is considered an implementation detail. One might or might not agree with this approach (C++ people probably won't, according to SO user Kaz), but it is what it is. On a practical level, though, knowing the time complexity of a function is essential, especially for things like real-time creative coding, so I went looking for something akin to https://wiki.python.org/moin/TimeComplexity but for SBCL, and I couldn't find it. So I'll try to post measurements here as I find them.
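In the meantime, a crude way to get a feel for the complexity of an operation is to time it at a few input sizes and see how the numbers grow. Here's a minimal sketch: MEASURE-SECONDS and LENGTH-SCALING are hypothetical helpers (not from any library), built only on the standard GET-INTERNAL-REAL-TIME and INTERNAL-TIME-UNITS-PER-SECOND. Timer resolution depends on the implementation, so very fast operations need a larger :repeat to register at all.

```lisp
;; Hypothetical helper: average wall-clock seconds for calling THUNK REPEAT times.
(defun measure-seconds (thunk &key (repeat 1))
  (let ((start (get-internal-real-time)))
    (loop repeat repeat do (funcall thunk))
    ;; Multiplying by 1.0 forces a floating-point result.
    (/ (- (get-internal-real-time) start)
       (* repeat internal-time-units-per-second 1.0))))

;; Hypothetical helper: for each size N, time LENGTH on an N-element list
;; and on an N-element vector. Returns a list of (n list-seconds vector-seconds).
(defun length-scaling (sizes)
  (loop for n in sizes
        for lst = (make-list n :initial-element 0)
        for vec = (make-array n :initial-element 0)
        collect (list n
                      (measure-seconds (lambda () (length lst)) :repeat 100)
                      (measure-seconds (lambda () (length vec)) :repeat 100))))
```

Calling (length-scaling '(1000 10000 100000)) returns one row per size. If lists behave as described in the next section, the list column should grow roughly linearly with n, while the vector column should stay roughly flat.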

Lists are expensive!

That might come as a surprise in a language built around LISt Processing.

But in Common Lisp (and in the other Lisps I have played with), lists are singly linked chains of cons cells, so adding an element at the end via (append my-list (list x)) is O(N), and so is computing the length via (length my-list) or (list-length my-list). Because most of the time, at least at the beginning, you're just working with lists, things can get slow pretty quickly: appending inside a loop makes building a list of N elements O(N²), since every append walks and copies everything accumulated so far. A common trick to overcome this is to always prepend via cons instead of appending, so that each insertion is O(1), and then, only at the end, reverse the list once before using it.

So, for example:

```lisp
;; We create our list in the opposite order
CL-USER> (defvar my-list
           (loop for i in '(9 8 7 6 5 4 3 2 1) collect i))

;; If we need to add stuff, we keep prepending
;; (the idiomatic shorthand for this is (push 10 my-list))
CL-USER> (setf my-list (cons 10 my-list))

;; We reverse at the end, just before using the list
CL-USER> (print (reverse my-list))

(1 2 3 4 5 6 7 8 9 10)
```
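To see the difference outside a REPL transcript, here's a minimal sketch with three hypothetical functions that build the same list of squares. The first appends inside the loop (O(N²) overall); the second uses the push + NREVERSE variant of the trick above. NREVERSE is the destructive cousin of REVERSE: it reuses the existing cons cells instead of allocating new ones, which is only safe here because nothing else holds a reference to the intermediate list. The third shows that LOOP's COLLECT clause is already linear in practice, because implementations such as SBCL keep a pointer to the tail of the list being collected.

```lisp
;; Quadratic: APPEND copies the whole accumulated list on every iteration.
(defun squares-with-append (n)
  (let ((result '()))
    (dotimes (i n result)
      (setf result (append result (list (* i i)))))))

;; Linear: PUSH conses onto the front in O(1), then a single NREVERSE at the end.
(defun squares-with-push (n)
  (let ((result '()))
    (dotimes (i n (nreverse result))
      (push (* i i) result))))

;; Also linear in practice: LOOP's COLLECT tracks the tail of the result list.
(defun squares-with-loop (n)
  (loop for i below n collect (* i i)))
```

Wrapping each call in TIME, e.g. (time (squares-with-append 20000)) versus (time (squares-with-push 20000)), should make the gap obvious; the exact numbers will depend on the machine.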

This is not purely theoretical 'academic' advice about a hypothetical situation that you'd never encounter out there in the programming streets; it can make a dramatic difference in your day-to-day work.

Just the other day I was writing a small creative coding sketch, and it was taking 10 seconds just to create the list of points I wanted to draw. So I profiled my program and found that most of the time was spent doing appends:

```
;;               Self        Total        Cumul
;;  Nr  Count     %  Count     %  Count     %    Calls  Function
;; ------------------------------------------------------------------------
;;   1    692  69.2    709  70.9    692  69.2        -  SB-IMPL::APPEND2
;;   2    211  21.1    211  21.1    903  90.3        -  foreign function syscall
;;   3     62   6.2     62   6.2    965  96.5        -  foreign function pthread_sigmask
;;   4     16   1.6     16   1.6    981  98.1        -  foreign function __poll
```

The whole thing was taking 10 seconds to run. After I switched to the cons+reverse trick, it dropped to the sub-second range (I didn't measure it further because it was good enough for what I needed).
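If the points genuinely need to be appended in order (say, because they are consumed while being built), another option is an adjustable vector with a fill pointer. The sketch below is hypothetical (MAKE-POINT-BUFFER, ADD-POINT and DIAGONAL-POINTS are made-up names), but VECTOR-PUSH-EXTEND is standard CL: it stores the element at the fill pointer and grows the vector when it runs out of room. In SBCL the growth is geometric, so appends are amortized O(1), and AREF gives constant-time random access; as noted above, though, the standard itself doesn't promise any of this.

```lisp
;; Hypothetical helper: an empty, growable vector to accumulate points into.
(defun make-point-buffer ()
  (make-array 0 :adjustable t :fill-pointer 0))

;; Hypothetical helper: append the point (X . Y) at the end of BUFFER.
(defun add-point (buffer x y)
  (vector-push-extend (cons x y) buffer)
  buffer)

;; Example: build N points on a diagonal, appending them in order.
(defun diagonal-points (n)
  (let ((buffer (make-point-buffer)))
    (dotimes (i n buffer)
      (add-point buffer i i))))
```

LENGTH on such a vector returns the fill pointer, i.e. the number of points pushed so far, and (aref points k) fetches the k-th point directly.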

Benchmarking

There are a few ways to profile and benchmark CL code, as documented here: https://lispcookbook.github.io/cl-cookbook/performance.html.

For quick "how long did this take" measurements, this is enough:

```lisp
;; TIME takes a form, so call the function rather than passing its name
(time (my-func))
```

But for proper profiling, I have found sb-sprof:with-profiling to be incredibly useful.

You will need to (require :sb-sprof) before you can use it.

```lisp
(sb-sprof:with-profiling (:max-samples 1000
                          :report :flat
                          :loop nil)
  (my-func))
```
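To put the pieces together, here's a self-contained sketch that can be loaded as a file. SLOW-POINTS is a hypothetical, deliberately slow workload (appending inside a loop), and MY-FUNC just wraps it so that both TIME and the profiler have something to chew on; with a workload like this I'd expect SB-IMPL::APPEND2 near the top of the flat report, as in the profile above. The REQUIRE is wrapped in EVAL-WHEN so that the SB-SPROF package also exists when the file is compiled with COMPILE-FILE, not only when it is loaded.

```lisp
;; Make the SB-SPROF contrib available at compile time too, not just at load time.
(eval-when (:compile-toplevel :load-toplevel :execute)
  (require :sb-sprof))

;; Hypothetical slow workload: quadratic appends while building a list of points.
(defun slow-points (n)
  (let ((points '()))
    (dotimes (i n points)
      (setf points (append points (list (cons i (* 2 i))))))))

(defun my-func ()
  (length (slow-points 5000)))

;; Quick wall-clock/allocation summary, printed to *TRACE-OUTPUT*.
(time (my-func))

;; Statistical profile of the same call, printed as a flat report.
(sb-sprof:with-profiling (:max-samples 10000 :report :flat :loop nil)
  (my-func))
```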

Compiling a binary via SBCL

CL is somewhat unusual compared to most programming languages: you can interact with it through a REPL (in SBCL, even the REPL compiles each form to native code before running it), but you can also compile your program down to a single binary.

This is one way to generate a binary:

```lisp
(sb-ext:save-lisp-and-die "this-will-be-the-name-of-your-final-binary"
                          :toplevel #'your-top-level-func
                          :executable t)
```
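For a slightly more complete picture, here's a minimal build script sketch. MAIN, BUILD and the binary name hello are placeholders; SB-EXT:SAVE-LISP-AND-DIE and SB-EXT:*POSIX-ARGV* are SBCL's own. One gotcha worth knowing: SBCL refuses to save the image while other threads are running, which is typically the case inside a SLY session, so it's easier to build from a fresh command-line SBCL, e.g. sbcl --non-interactive --load build.lisp --eval '(build)'.

```lisp
;; build.lisp: a hypothetical build script for a tiny command-line tool.

(defun main ()
  "Placeholder entry point: greet whoever is named on the command line."
  ;; SB-EXT:*POSIX-ARGV* holds the program name followed by its arguments.
  (let ((name (or (second sb-ext:*posix-argv*) "world")))
    (format t "Hello, ~a!~%" name)))

(defun build ()
  "Dump the current image as a standalone executable called hello."
  ;; When MAIN returns, the executable exits with status 0.
  (sb-ext:save-lisp-and-die "hello"
                            :toplevel #'main
                            :executable t))
```

The resulting ./hello should then greet whatever name you pass as its first argument. Since the whole Lisp image is dumped into the executable, expect the file to be fairly large.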

Note that as far as I know, there's no easy way to generate a fully static build.

Showcase

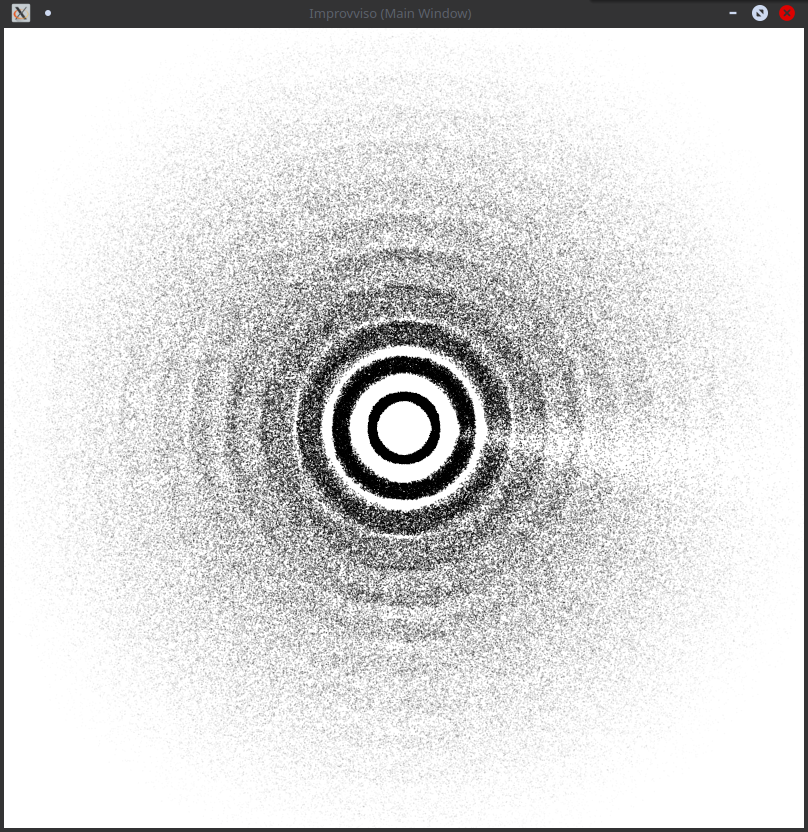

Because words are cool but pictures are cooler, here are some sketches that I've rendered via my custom creative coding framework, which follows a simple client/server architecture: the rendering code is written in Rust (and uses WebGPU), and the client code is written in Lisp and sends render commands to the Rust server (this model was heavily inspired by this 2017 post by Inconvergent).
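To make the client/server split a bit more concrete, here's a minimal sketch of what the Lisp side of such a setup could look like. Everything protocol-specific is hypothetical and not the framework's actual protocol: the text format (one LINE x1 y1 x2 y2 command per line), the port 4242 and the function names. The socket calls come from SBCL's bundled sb-bsd-sockets contrib.

```lisp
;; SBCL's BSD sockets contrib, needed at compile time for the package prefix.
(eval-when (:compile-toplevel :load-toplevel :execute)
  (require :sb-bsd-sockets))

;; Hypothetical command encoding: a line segment as one line of text.
(defun line-command (x1 y1 x2 y2)
  (format nil "LINE ~f ~f ~f ~f" x1 y1 x2 y2))

;; Send each command string, newline-terminated, to a (hypothetical) render server.
(defun send-commands (commands &key (host #(127 0 0 1)) (port 4242))
  (let ((socket (make-instance 'sb-bsd-sockets:inet-socket
                               :type :stream :protocol :tcp)))
    (unwind-protect
         (progn
           (sb-bsd-sockets:socket-connect socket host port)
           (let ((stream (sb-bsd-sockets:socket-make-stream
                          socket :output t
                                 :element-type 'character
                                 :buffering :full)))
             (dolist (command commands)
               (write-line command stream))
             (finish-output stream)))
      ;; Always release the socket, even if connecting or writing fails.
      (sb-bsd-sockets:socket-close socket))))
```

With a server listening, something like (send-commands (list (line-command 0 0 100 100))) would ship a single diagonal line. Keeping the client this thin means the Lisp side can be redefined and re-evaluated from the REPL while the renderer keeps running.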

Resources

- The Lisp Cookbook: https://lispcookbook.github.io

- Lisp Lang: https://lisp-lang.org/learn/first-steps

- Land of Lisp comic: http://landoflisp.com